blplaybills.org: better search results using LLMs

Search for, view and download 300 years of theatre posters from Great Britain and Ireland, 1600–1902.

7 October 2025Search for, view and download 300 years of theatre posters from Great Britain and Ireland, 1600–1902.

7 October 2025

Blog series Digital Scholarship blog

Author Sak Supple (guest blog post)

The blplaybills.org website provides a way to search for, view and download 300 years of theatre posters from Great Britain and Ireland, 1600–1902, as curated by the British Library (BL).

The site relies on extracting text from scans of historical documents using a machine learning technique called Optical Character Recognition (OCR). Historically, this has been a pattern matching technique that compares patterns on the document with image patterns known to the technology.

This can produce reasonable results, but there are a number of problems when used with historical documents that can lead to unexpected results:

Despite these challenges, blplaybills.org used open source OCR projects like Tesseract and Textra to produce usable content that has given researchers valuable access to the playbill collection.

However there is now another option: using modern LLMs to do the job.

This post discusses how experiments using modern Large Language Models (LLMs) for OCR led to significantly improved text extraction from playbill scans, which means that blplaybills.org now provides more accurate search results.

The pace of change in the world of LLMs is rapid.

In the last six to 12 months a number of LLMs have proven to be especially adept at character recognition, and they are now used routinely for extracting text in personal settings (photographs and receipts on your phone) and commercial applications (invoices, document summary, RAG – Retrieval-Augmented Generation).

My application is very simple in comparison, and as we shall see this simplicity created problems for the LLMs.

The general approach to evaluate a number of LLMs was as follows.

After initial research, the short-listed models were:

I’ve excluded OpenAI models since although one was originally on the short list, I never got as far as testing it. More on that later.

There are a number of challenges using larger models with a higher number of parameters like qwen/qwen2.5-vl-72b-instruct and mistral-large-latest. In practical terms the main issue is that these models will only run on powerful hardware that is not readily available, locally or remotely, to every organisation.

One good solution to this is to access the models on remote hardware, using an Application Programming Interface (API) provided by a third party. So test software was written to use APIs from OpenRouter (provides a unified API to access many AI models), allowing me access to the models on their hardware.

The commercial offering from Google could only be used with an API, and costs for using it are relatively low compared to some of the competition.

Tests were done and the final winner was gemini-2.5-flash.

Reasons for excluding Qwen and Mistral LLMs:

The Google model, gemini-2.5-flash, did not exhibit these problems and the OCR text was close to perfect, even when presented with very poor scans and difficult to process multi-column layouts.

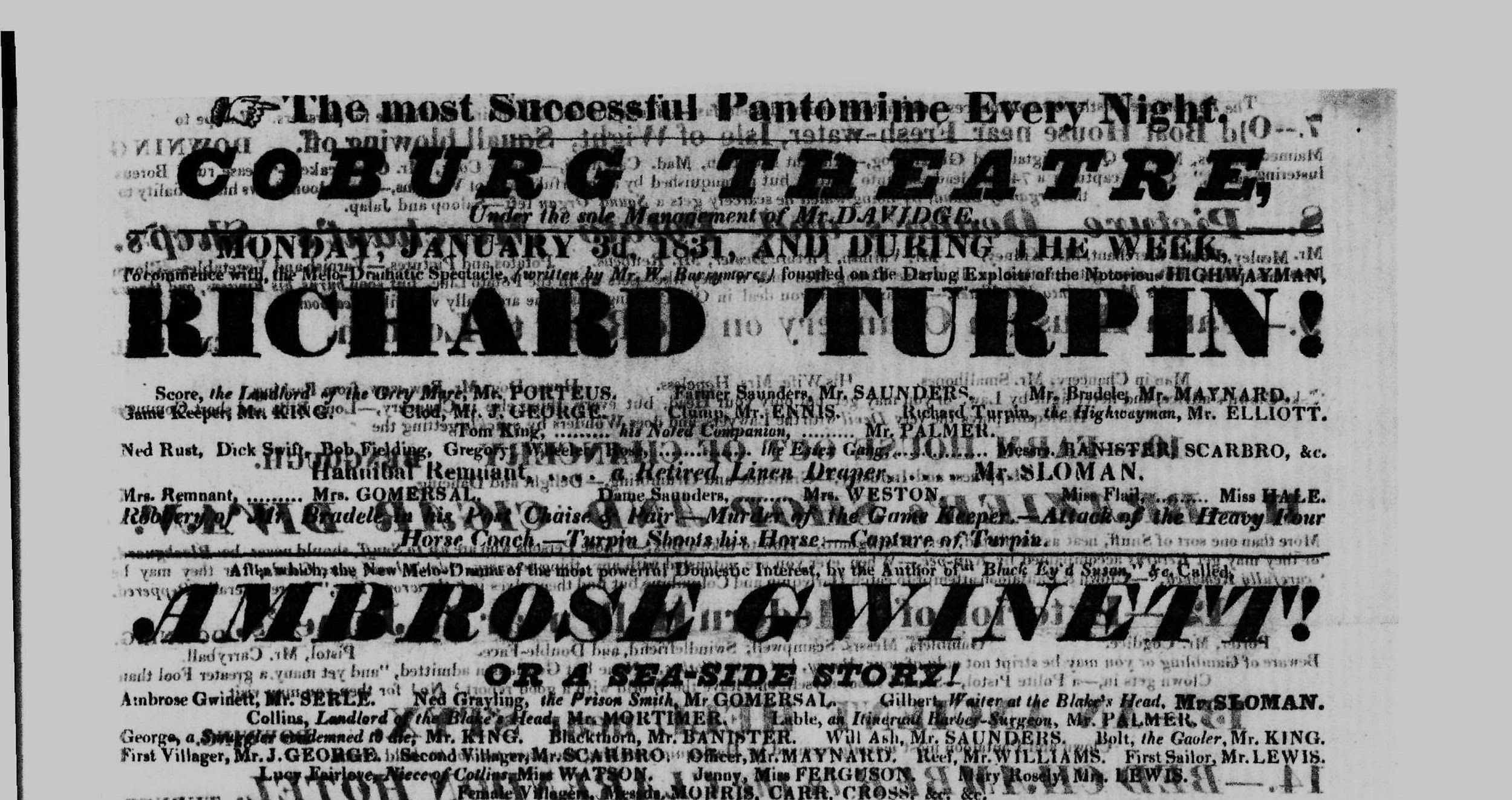

Consider this extract from a playbill image:

The previous OCR software produced this extract:

I The most Successi Pantomime Buer Nishio

COBURG

The sp

SO MEDAKIDCE

สงONPLURNUNK

831 AND DURING.

ariug Ex

RICHAR

HIGHWAYMAN,

I TURPIN!

sundere

•SAUNDER

ghosyman, Mr.

dele, Mr, MAr. ELLIOTT.

T PALN

sheelert

ANISFER SCARBRO, &c.

Hetines

MAN.

Mrs. Remnant,

Torse Chach

BRO

เธอไร loo i roteng e.usata bus"

The updated LLM workflow produced this extract:

The most Successful Pantomime Every Night.

COBURG THEATRE

Under the sole Management of Mr. DAVIDGE

ON MONDAY, JANUARY 3d, 1831, AND DURING THE WEEK,

To commence with the Melo-Dramatic Spectacle, written by Mr. W. Barrymore, founded on the Daring Exploits of the Notorious HIGHWAYMAN,

RICHARD TURPIN!

Score, the Landlord of the Orry Mere, Mr. PORTEUS. Farmer Saunders, Mr. SAUNDERS.

Game Keeper, Mr. KING. Clump, Mr. ENNIS. Richard Turpin, the Highwayman, Mr. ELLIOTT.

Ned Rust, Dick Swift, Bob Fielding, Gregory, &c. Mr. Bradley, Mr. MAYNARD.

Hannibal Remuant, a Retired Linen Draper, Mr. SCARBRO, &c.

Mrs. Remnant, Mrs. GOMERSAL. Dame Saunders, Mrs. WESTON.

Robbery of the MAIL Coach,—A HORSE Race,—A desperate Chaise Affair—Turpin Shoots his Horse,—and Capture of Turpin.

There is a significant improvement.

At this point I was satisfied with the results and decided to abandon testing the OpenAI model.

Instead, my work was redirected into creating a workflow to use gemini-2.5-flash to process all 84000+ playbills and index the content for the live site.

Generally speaking, when ‘talking’ to a LLM via an API, we can send a ‘prompt’ together with the data that we want the model to work with.

Think of the prompt as a set of instructions to help the LLM understand our intentions better, and therefore return a response that better suits our needs. These instructions can specify things we want it to include in the response, and crucially, also what we want it to exclude.

When deciding on a prompt I consider the following:

My experience is that these guidelines do not work consistently for all models, but they worked well with the Gemini models.

A key aspect of the success of the Gemini model was that it responded well to prompt changes, so although the final prompt was quite simple, the model did respect it.

The prompt is:

Perform OCR on the following image.

IMPORTANT:

- Return ONLY the text contained in the image, in the order it appears.

- Do not add any summary, context, analysis, or introductory text like "The text in the image is:".

- The image is a historical document and may use unusual fonts, have variable lighting, and contain artifacts.

- Be aware of historical letterforms, such as the 'long s' (ſ), and transcribe it as a modern 's'.

With hindsight, I might add more detail about how to handle diphthongs, but as someone with a print background, I enjoy seeing them unchanged in the final text.

A crucial part of this approach is to spend time experimenting with different prompts, understanding how each changes the behaviour of a specific model, and iteratively changing the prompt till consistent results are achieved.

A new workflow was designed that used Google APIs to OCR all playbills in the collection and send updated text and metadata to the database for indexing.

Key points of the implementation:

blplaybills.org now uses this new text and the quality of search results has been transformed.

The Gemini models are commercial, closed source and are constantly updated.

The implications of this are:

A useful piece of work might be to experiment with non-commercial models:

If this approach produces good results, then a more generalised workflow to apply these techniques to other types of English or multilingual content might look like this:

In the meantime, researchers who use blplaybills.org have a better chance of finding what they’re looking for than was the case a couple of weeks ago.

This blog is part of our Digital Scholarship series, tracking exciting developments at the intersection of libraries, scholarship and technology.

You can access millions of collection items for free. Including books, newspapers, maps, sound recordings, photographs, patents and stamps.